Issue 2 | Assessment Scope, “What Abouts,” and Seeing Risk Beyond Your Own History

How to protect your risk assessment scope, avoid the gambler’s fallacy, and keep decisions on track

In This Month's Issue:

📖 Book update: Getting Unstuck

🎯 The Risk Analysis Scope Creep Death Spiral

📄Book Excerpt: Defending Your Scope Against “What Abouts”

🔍 Risk Analysis and the Gambler's Fallacy: Why Your Inside View Isn’t Enough

📚 What I’m Reading: antifragility in risk models, the economics of security failures, and the joy of deep reading

🗂 From the Archives: two posts on probability language and expert disagreement

Hey there,

Welcome to Issue 2! Thanks for continuing this journey with me. This month, I'm tackling a common problem I see in the field: risk assessment scopes that grow and grow and grow until they strangle the very decisions they were meant to support.

Whether you're new to cyber risk quantification or a seasoned practitioner, you've probably felt this pain. Let's fix it.

I'll be speaking at SIRAcon next month, the annual conference hosted by the Society of Information Risk Analysts. It's a model-neutral and vendor-neutral non-profit that advocates for risk quantification in the technology field.

This year's conference is in Boston on September 9-11. Whether you're a beginner or advanced practitioner, there's something for everyone. I'll be discussing how to leverage Generative AI and LLMs to enhance your risk management practice. Hope to see you there!

More on my talk here, and you can learn more and register here.

📖 Book update: Getting Unstuck

My book, "From Heatmaps to Histograms: A Practical Guide to Cyber Risk Quantification," comes out in early 2026. Pre-order links and a release date will come in a few months. I'm about half done with the book, tackling the hardest chapters first. Editing is proving to be the most challenging part and often takes longer than writing the initial draft. This process is a reminder of the famous quote, often attributed to Mark Twain:

“I didn't have time to write a short letter, so I wrote a long one instead.” - Mark Twain

Through this process, I've put an enormous amount of thought into why risk quantification programs fail - and it happens at three distinct levels.

At the individual level, practitioners often become overwhelmed by the apparent complexity, fail to commit to personal learning, or lack the basic statistical thinking required to get started.

At the team level, failures often result from using the wrong tools, applying inadequate techniques, skipping proper team-based training, or attempting to retrofit quantitative concepts onto qualitative frameworks that can't support them.

At the organizational level, programs fail due to poor stakeholder engagement, lack of executive buy-in, or perverse incentives that reward compliance theater over genuine risk reduction.

The root cause behind most of these failures is simple to state, but it's hard to grasp and even harder to devise meaningful solutions.

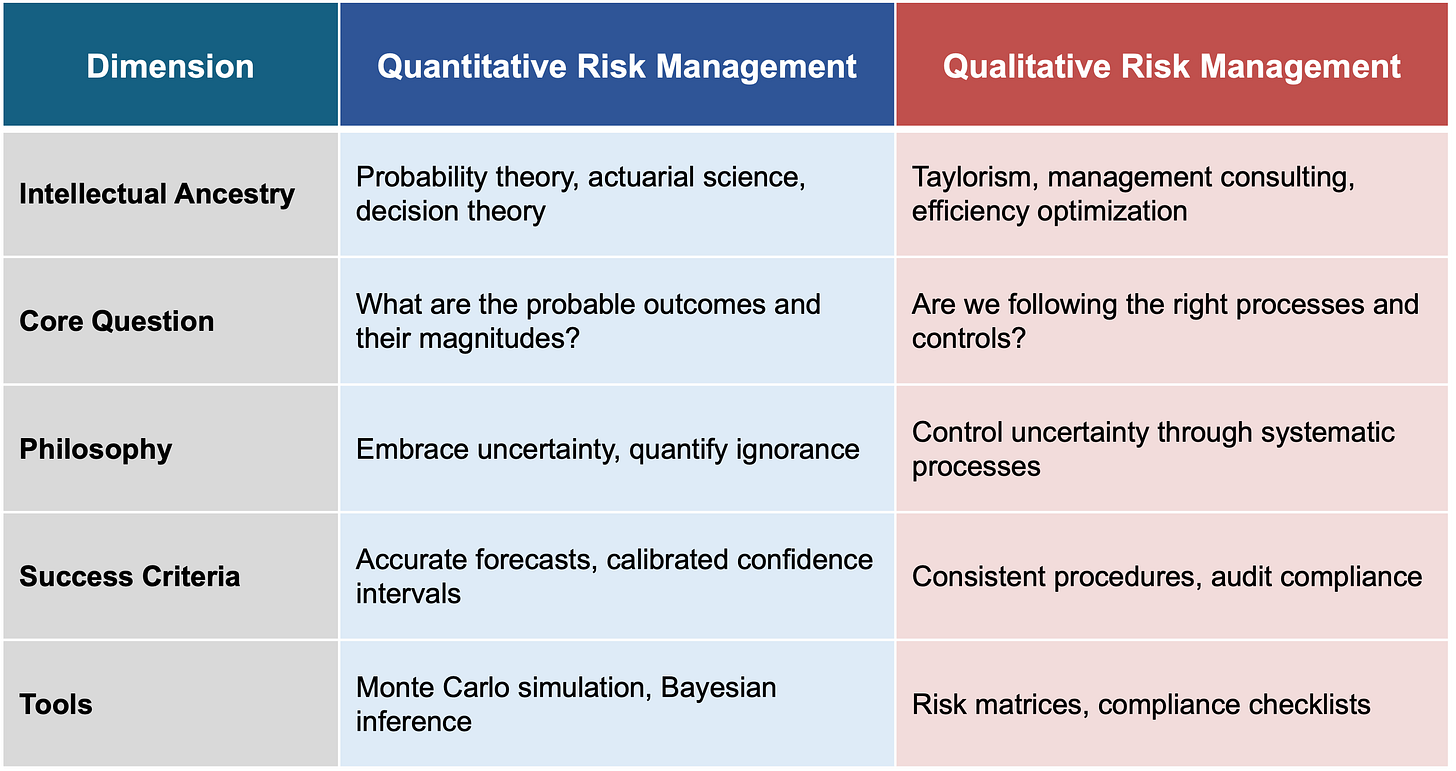

Here's my hypothesis: we've been treating two fundamentally incompatible disciplines as if they're just different flavors of the same thing. Qualitative and quantitative risk management appear side-by-side in textbooks and frameworks, but they come from completely different intellectual traditions: one rooted in 300 years of probability theory, the other emerging from industrial management optimization in the 1970s. They have different philosophies, success criteria, and tools. People get "stuck" when they fail to recognize this divide and attempt to quantify the unquantifiable, such as calculating ROI from heat map movements, using risk scores to allocate budgets, or assuming that moving from red to yellow signifies a measurable reduction in risk.

My goal in the first portion of my book is to help individuals, teams, and organizations get unstuck. Most people don't realize they're trying to blend fundamentally incompatible approaches. Whether you're ready to embrace quantitative methods or need to work within existing qualitative constraints, the path forward starts with recognizing which discipline you're actually practicing; then aligning your tools, metrics, and expectations accordingly.

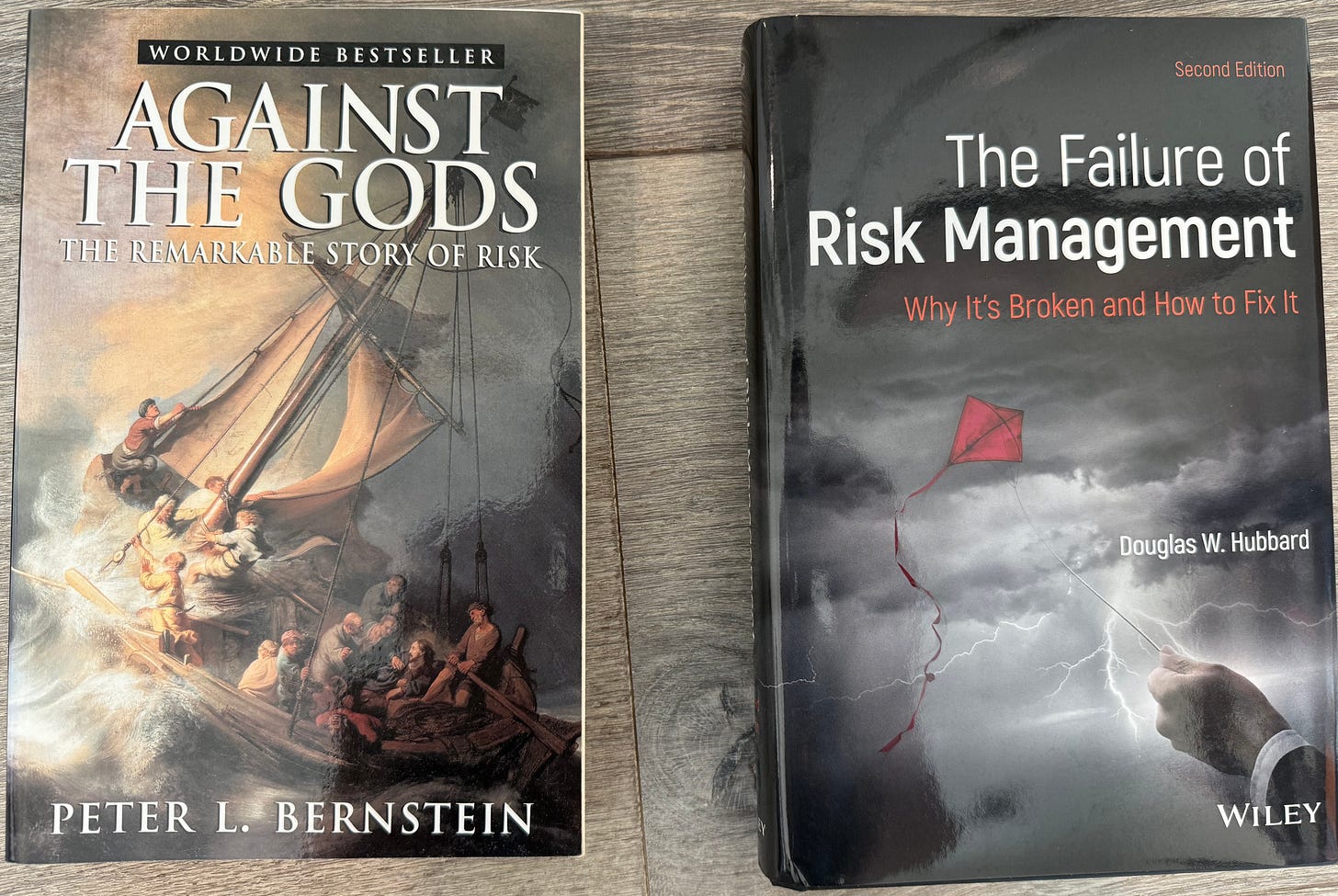

I'll cover this divide in my book, but if you can't wait and want to explore these ideas now, there are two books every risk analyst should read.

Against the Gods: The Remarkable Story of Risk by Peter Bernstein shows you that risk management isn't some modern invention born from compliance frameworks. It's a centuries-old intellectual tradition stretching from Renaissance gamblers to Wall Street quants. Understanding this pedigree helps you see why real risk analysis requires mathematical thinking, not just following an ISO standard or filling out templates.

The Failure of Risk Management: Why It’s Broken and How to Fix It by Douglas Hubbard systematically dismantles the qualitative methods most organizations use, demonstrating with brutal clarity why heat maps and risk matrices don't actually manage risk. He doesn't just critique; he provides a practical path forward, showing how to apply proven quantitative methods to the very problems organizations claim are "unmeasurable."

Together, these books reveal both where we came from and where we need to go. Against the Gods shows the intellectual heritage you're part of when performing a quantitative risk assessment. The Failure of Risk Management shows why most of what passes for "risk management" today is theater, not analysis.

🎯 The Risk Analysis Scope Creep Death Spiral

Lately, I've been thinking a lot about risk analysis scope creep. Earlier in my career as a risk analyst, I didn't have the technical know-how and rhetorical skills to recognize and rein in this common problem. What starts as "let's assess our ransomware exposure" somehow morphs into modeling every conceivable threat from insider trading to asteroid impacts.

This reminds me of those old DirecTV commercials from years back. Here's how one went:

When your cable company keeps you on hold, you get angry. When you get angry, you blow off steam. When you blow off steam, accidents happen. When accidents happen, you get an eye patch. When you get an eye patch, people think you're tough. When people think you're tough, they want to see how tough. And when they want to see how tough, you end up in a roadside ditch.

Don't wake up in a roadside ditch. Get rid of cable and upgrade to DirecTV.

Now, the risk assessment version:

When you start a simple ransomware assessment, you realize you need to model supply chain risk. When you model supply chain risk, you discover fourth-party vendor dependencies. When you discover fourth-party dependencies, you start mapping the entire global semiconductor supply chain. When you map the global semiconductor supply chain, you're analyzing geopolitical tensions in Taiwan. When you're analyzing geopolitical tensions, you're building World War III scenarios. When you're building World War III scenarios, you're calculating the cyber risk impact of nuclear winter.

Don't calculate the cyber risk impact of nuclear winter. Scope your assessment properly.

Why This Matters (Especially for Beginners)

I once watched a 6-month risk assessment die on the vine because it took too long. The team had produced incredible work: sophisticated threat modeling, multiple risk scenarios, and analysis of every vector that stakeholders even casually mentioned in passing. "What about USB drives?" became a multi-page appendix. "Could nation-states target us?" spawned geopolitical risk research.

By the time they delivered their report, the CISO had already allocated next year's budget. The decision they were supposed to inform was made two months prior, without the benefit of a risk analysis.

Risk assessments aren't academic exercises; they're decision support tools operating under real-world constraints. When you lose sight of that, you're not being thorough; you're being counterproductive.

The Warning Signs

Watch for these scope creep red flags:

"While we're at it, let's also look at..."

Risk scenarios requiring multiple independent failures, plus a solar flare

Modeling threats that would require your company to first become 10x more important

Analysis timeframe exceeding the decision timeframe

Stakeholders who've stopped asking when you'll be done

The Antidote

Before you start any risk assessment, get crystal clear on three things:

What decision needs to be made? Not "understand our risk" but "should we invest $X in Y?"

When does that decision need to be made? Work backwards from this date, not forwards from today

What would change their mind? If the answer is "nothing," you're writing a report, not doing risk analysis

Remember: A good-enough assessment delivered in time to influence a decision beats a perfect assessment delivered to the void.

📄Book Excerpt: Defending Your Scope Against “What Abouts”

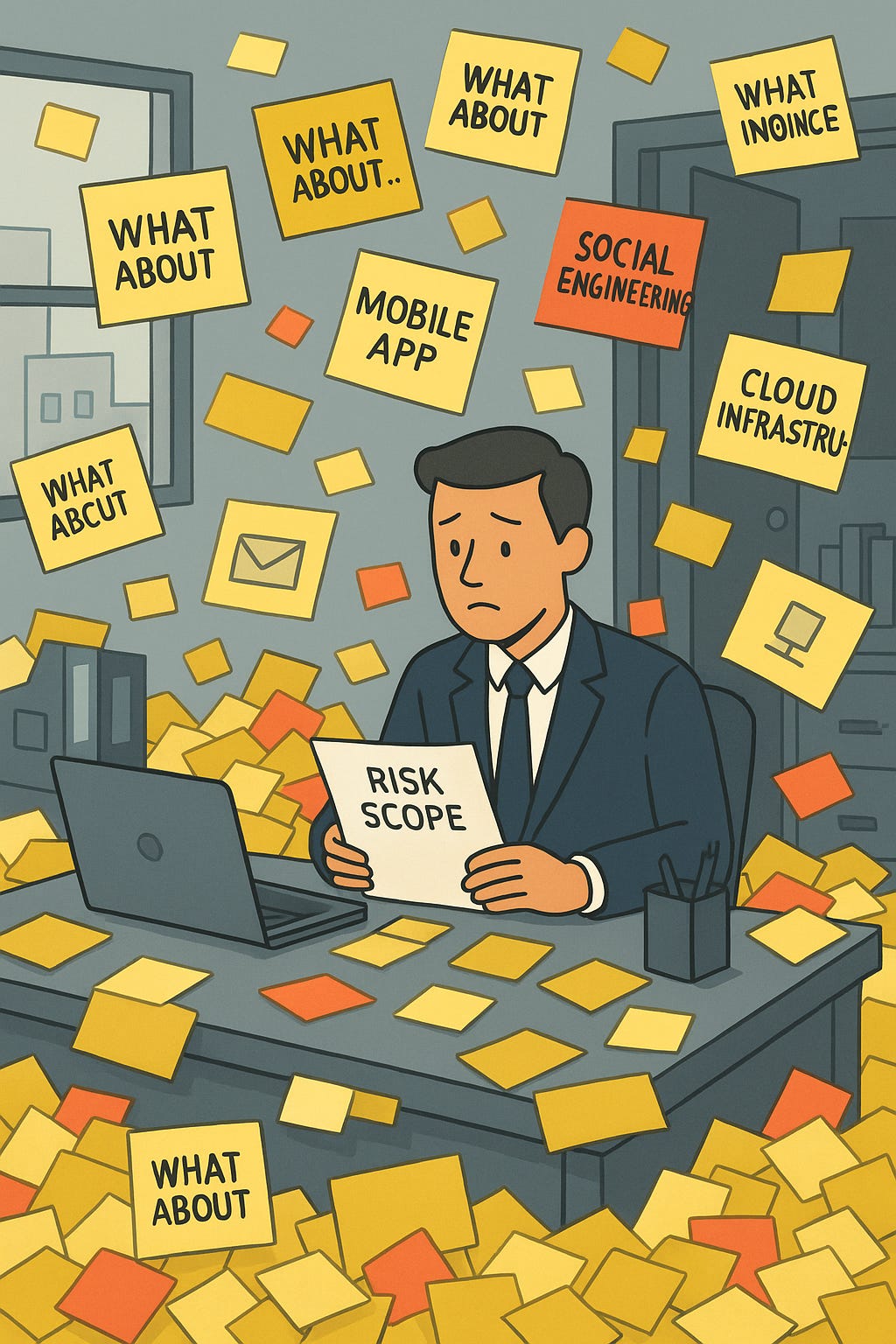

Here's an excerpt from my book that ties in nicely to the previous section on scope creep. I call all these additional requests that can sink an assessment "what abouts." This is from my chapter on risk scenario building:

Be very protective of the in-scope and out-of-scope items in your risk assessment. As you circulate the risk scope to various teams for feedback, you will inevitably receive a flood of "what abouts" - the scope creep requests that arise once people see your risk scenario. It goes something like this:

"What about insider threats? Shouldn't we include those too?"

"What about our mobile app? That has customer data too, right?"

"What about that new ransomware group we read about in the threat intel brief?"

"What about social engineering? That's how most breaches start."

"What about our cloud infrastructure? That could be a risk too."

These are all valid concerns, but your scope doesn't necessarily need to extend to these items. You do have to listen to each and every one, but bring it back to two things: the risk statement and the business decision the assessment will inform. Do the additional what abouts inform those?

The opportunity cost matters: Every additional piece of scope costs something: your time to collect and analyze data, stakeholder time for interviews, potential delays to decision-making, and sometimes real money for external research or tools. Before expanding your scope, ask:

Will this additional analysis change the decision we're trying to make?

Is the cost (time, effort, money, delay) of including this worth the potential insight?

Could the decision-maker act confidently without this extra information?

Does this address the original business concern, or is it just intellectually interesting?

Every hour you spend analyzing tangential risks is an hour not spent on the core question. Every week you delay the assessment while gathering "nice-to-have" data is a week the business operates without the insights it needs.

Remember: The goal isn't to analyze every possible factor of every single risk in the universe. It aims to provide decision-makers with sufficient insight to act confidently on the specific concern that prompted this assessment.

How to respond to scope creep: "That's an interesting point. Let's capture that as a separate risk to analyze later, but for this assessment, we're focused on [restate your original scope] because it directly addresses the [business decision]."

Stay focused, stay decisive, and protect your scope. You can always do another assessment later.

Risk Analysis and the Gambler's Fallacy: Why Your Inside View Isn’t Enough

I’m writing this from the Mandalay Bay in Las Vegas, taking a break from Black Hat. Last night, walking through the casino, I stopped at a roulette table where a small crowd had gathered.

Above the wheel, an electronic board flashed “HOT” and “COLD” numbers, showing the recent winners and the “overdue” losers. Black had hit six times in a row.

A guy next to me slid a stack of chips onto red.

“It’s due,” he told his friend.

The wheel spun. Black again. Seven.

That board is a master class in psychological misdirection. The casino knows exactly how people will read it. They are not offering insight. They are using a bias that keeps people betting.

The Inside View Trap

What I saw was the Gambler’s Fallacy: the belief that past results somehow change the odds in an independent game. In reality, every spin is the same, with eighteen chances for red, eighteen for black, and two for green in an American game. About 47.4 percent either way. The past does not matter.

Daniel Kahneman talks about this in terms of the inside and outside view. The inside view is personal. All the details are right in front of you. Seven blacks in a row - black must be “hot.” The outside view zooms out and looks at the bigger picture. Spins are independent, and the odds have not moved an inch. The outside view starts with what Kahneman and Tversky first described, later expanded by Kahneman and Lovallo, as a “reference class” approach: finding similar past cases to establish a base rate, then adjusting for your own specifics.

In risk work, the inside view feels compelling because it is your data and your history. But without the outside view to anchor it, it can be dangerously misleading.

From Roulette to Cybersecurity

The next day at Black Hat, I overheard someone say, “We haven’t had a major incident in three years. Our security program is clearly working.”

It is the same mental trap. The absence of bad news feels like proof of success. But in cybersecurity, events are not like roulette spins. They are connected in messy ways: shared vulnerabilities across common platforms, attackers reusing tools, exploit kits spreading, supply chains concentrating risk, and defenses that work for a while until attackers shift tactics.

So, unlike roulette, where past spins tell you nothing, in cyber, we should update on external events and peer data, not just our own quiet streak. Three quiet years could mean you have done a great job. It could also mean the attackers were busy elsewhere, or that someone is already inside and you have not found them yet. Sometimes it is just normal variation. Without looking beyond your own history, you cannot improve your forecast about which of these is actually happening.

The Data Blind Spot

This is where loss event frequency estimates often go wrong. If you base them only on your own history, a quiet stretch might make the probability look close to zero. But take the outside view, look at industry data, and you might find that in many circumstances, annual probabilities for material incidents land somewhere in the single-digit to low double-digit range. The exact number depends on your sector, size, and exposure.

Think about SolarWinds or Kaseya. Many companies had no frame of reference for a supply chain compromise of that scale. Their models, built entirely on internal experience, underestimated both the frequency of such events and their potential severity. Well-run risk programs that took the outside view already had this kind of event in their scenarios long before those breaches made headlines. The 2013 Target breach through an HVAC vendor, the 2017 NotPetya attack spread via a compromised accounting software update, and the 2017 CCleaner incident all showed that trusted channels can be turned into attack vectors. Programs built on that foundation could see risks to their company that had never happened within their own walls. Zero incidents in your history do not mean zero incidents in your future.

Mixing the Views

The best risk models start with the outside view: industry data, base rates, and what is happening in the wild. Then they layer in the inside view: your controls, your attack surface, targeting patterns you have seen, and the current threat landscape.

You do not assume the outside view is perfect, and you do not throw away the inside view just because it is biased. You blend them. You keep your ranges wide enough to be honest about uncertainty. And you write down your reasoning so you can revisit it later or change it when new data arrives.

Humility in the Model

Roulette is easy to describe. The odds are fixed, the events are independent, and the math is clean. Cyber risk is the opposite. Events influence each other. The threat landscape changes constantly. The data is incomplete and often noisy.

The teams that get this right do not confuse a lucky streak with a winning strategy. They use their own data but never rely on it alone. They check it against the outside view because “we have never seen it happen” is not the same as “it cannot happen here.”

In risk, as in the casino, betting on patterns that do not exist is the fastest way to lose. The strongest risk analysis programs follow the Three-Source Framework I wrote about in Issue 1 of this newsletter. Start with external data because it gives you the outside view: industry context and base rates that are bigger than your own experience and immune to the false comfort of a quiet streak. Then bring in internal data to ground the analysis in your specific reality, and subject matter expert input to bridge past and future with judgment about current threats, new controls, and changing conditions. Each source has blind spots, but together they create something far more reliable than any single input. Do this consistently, and you will stop betting on luck and start making better, more defensible decisions.

Further reading:

Kahneman, D., & Tversky, A. (1979). Intuitive prediction: Biases and corrective procedures. In Forecasting

Kahneman, D., & Lovallo, D. (1993). Timid choices and bold forecasts: A cognitive perspective on risk taking. Management Science, 39(1), 17–31.

📚 What I'm Reading

I’ve been rereading Antifragile through the lens of cyber risk quantification, asking: what makes a risk model fragile? Taleb’s core idea is that fragility isn’t just about being vulnerable. It’s about being harmed by volatility, randomness, and stressors. Many traditional risk models, especially those built on single-point estimates or assumptions of normality, fall apart when hit with real-world uncertainty.

Taleb is particularly critical of what he calls fragilistas: people who try to control complex systems with overly simplified models, often creating more harm than good. If that sounds familiar in cyber risk management, it should.

In contrast, he argues we should look for systems that benefit from disorder. In cyber risk terms, that means favoring models that expose uncertainty with distributions, adapt to new data, and resist overfitting. The book is dense, digressive, and a bit too confrontational for my comfort, but its insights are powerful. It reminds us that precision without resilience is a liability.

Why Information Security is Hard – An Economic Perspective by Ross Anderson

This paper is over 20 years old, but it’s still one of the most useful things you can read if you're trying to understand why so many security problems persist. Better tools, better talent, and bigger budgets haven’t solved them. Anderson’s core argument is that most security failures aren’t technical; they’re economic.

He explains how misaligned incentives, externalities, and information asymmetries create a world where the people who could fix problems often aren't the ones who suffer from them. It’s a foundational concept in security economics, and it helps explain everything from underinvestment in basic hygiene to why insecure software is still everywhere.

This matters for risk quantification, too. If we’re trying to measure and model risk, we need to understand not just what’s vulnerable, but why it stays that way. Incentives shape outcomes. Without that lens, even the best models will miss the mark.

The topic is still very relevant. I recommend it to anyone who wants to understand and solve real-world security problems.

The Joy of Deep Reading by Rick Howard

I recently went back and read Rick Howard’s July First Principles newsletter on the joy of deep reading, and it hit uncomfortably close to home. I love reading, but I am guilty of skimming far too much in my rush to consume as much as possible each day. Rick makes a compelling case for slowing down, picking one great book, and going deep, really engaging with the material instead of chasing bullet points and AI summaries.

What I loved most was how he blends personal stories, history, and curated recommendations from the CyberCanon project. If you have ever felt the pressure to “keep up” and ended up sacrificing depth for speed, this will remind you why deep reading is worth it.

If you care about cybersecurity, strategy, or just sharpening your thinking, I highly recommend subscribing to Rick’s First Principles newsletter. You will come away with ideas that stick and a reading list that is actually worth your time.

🗂 From the Archives

To keep decisions from drifting, here are two older posts of mine that fix two quiet common failures: fuzzy language and mismatched expert input.

Probability & the words we use: why it matters

I wrote a while back about how words like likely, high chance, or probably can completely derail a risk discussion. The CIA and NATO have both done research showing that people interpret these words in wildly different ways, sometimes by 40 percentage points or more.

You see this all the time in boardrooms, forecasts, and risk reports. Everyone thinks they’re on the same page, but they’re not.

The fix? Stop thinking like a storyteller and start thinking like a weather forecaster. Put numbers on it. High likelihood becomes 70% chance. Now it’s clear, and you can actually make a decision with it.

When Experts Disagree in Risk Analysis

Ever had one expert give you a risk estimate that’s way outside what everyone else is saying? It’s more common than you might think, and it’s worth understanding why before you throw their number out.

From what I’ve seen, it usually comes down to one of four things:

They’re not calibrated

They misunderstood the question

They have a different worldview

They know something the others don’t

Sometimes it’s noise, but sometimes it’s the most important data point in the room. The only way to know is to dig in, ask follow-up questions, and be honest about what you find.

If you work with SMEs in your modeling or forecasts, this is one to bookmark.

✉️ Contact

Have a question about scoping risk assessments? Here's how to reach me:

Reply to this newsletter if reading via email

Comment below

Connect with me on LinkedIn

❤️ How You Can Help

✅ Share your scope creep horror stories - they might become examples in the book

✅ Forward this to a colleague who's stuck in analysis paralysis

✅ Click the ❤️ if this helped you escape a scope death spiral

Thanks for reading, and remember: the perfect risk assessment that never gets finished is infinitely less valuable than the good one that informs a decision this week.

—Tony

Here is an interesting dilemma on cyber risk quantification. You need both internal and external data to get to a high-fidelity model.

- Internal data to understand assets at risk and get visibility into controls in place.

- External data to inform the model with attacks and incidents (frequency, severities, TTPs, emerging techniques).

The information required is all there, but it sits in silos. Here is the challenge: it's a business challenge, not a technical one, not a model one:

- Businesses don't want to share their internal cybersecurity insights with outside parties such as insurers, cyber insurtech, and risk quantification companies that need it to inform their models

- Businesses don't have access to the incident and claims databases that insurers own. A few outside and independent entities have good information, but it is not free.

Hello Tony, first of all, thank you so much for this awesome newsletter! Thank you also for the book you're writing—I am really looking forward to reading it. II'll also be joining the SIRACON25 (virtually) so I am looking forward to watching your meeting on AI.

work at a GRC software vendor, and we have built a tool for risk quantification, addressing ERM and ORM types of risks. I am observing that many clients and prospects are very excited about risk quantification, but they continue using the qualitative approach even though they are perfectly aware of its limitations in supporting decision-making, and I am wondering why that is.

So, referring to the initial part of this newsletter where you provide some reasons why risk quantification programs fail, I have thought of two more, and I would love to know your opinion.

The first is not CRQ-specific and concerns the adoption of risk quantification by clients. The majority of organizations still use the qualitative approach, which is highly subjective. As such, when a risk assessor selects a specific level, it's basically impossible that someone will challenge their opinion, since it's subjective.

Conversely, if the assessor uses a quantitative approach, which is all about money (everyone understands money!), as soon as a specific risk exposure value is established, certainly most of the stakeholders will ask the assessor, "Where the hell does that number come from? Are you sure? What's the rationale behind it?"

So, I would argue this is mainly a psychological issue related to liability—that is, if the assessor adopts a quantitative approach to evaluate risk exposure, they will eventually experience significant pressure regarding the assessment decision, and over the long term, this pressure may cause the assessor to refrain from continuing to use that approach.

What do you think about this potential issue?

The second reason is CRQ-specific and, in short, concerns the scalability of the assessment process. Over the past years, I have spoken with multiple companies (mainly multinationals), and all of them claimed they need to assess hundreds, if not thousands, of ICT risk scenarios. Therefore, if they had to conduct assessments using risk quantification, it would take a lot of time—in other words, the process does not scale. A claim like this seems to relate more to the scope creep issue you described in the newsletter, but I'd like to know your opinion on the scalability issue related to ICT risks.

Thanks

Luciano