Issue 5: What You Can't See Can Still Hurt You

How to quantify zero-event risks, emerging threats, and the unimaginable

📖 In This Month’s Issue:

📋 Book update: Scenario building and measurable scope

🧠 Three ways to quantify never-happened risks

📗 Book excerpt: Handling “impossible” scenarios in workshops

✉️ Contact and how you can help

Hey there,

Welcome to Issue 5! If you’ve ever been asked to quantify a risk your organization has never experienced, you know that sinking feeling. No internal data. No precedent. Just a blank Word doc and a decision-maker waiting for an answer.

This issue gives you the framework. It won’t make the work easy, but it will make it possible.

📖 Book Update: The Art of Keeping Scope Tight

I just finished up a rewrite of the scenario-building chapter, and it is turning into one of the most important parts of the entire book. This isn’t about dreaming up dramatic threat stories. It is about creating scenarios that keep stakeholders focused and give analysts something they can actually measure.

A beautifully crafted scenario is worthless if you cannot quantify it. The entire book builds from this foundation. Once we define a clear, measurable scenario, the next chapters show exactly how to estimate each component.

This is where risk analysis turns from speculation into numbers that support real decisions.

A Cover, A Reviewer, and Some Gratitude

Last week I spent a few hours going through cover image options with the publisher, and we picked a great one. I can’t share it yet, but I love it!

I’m incredibly grateful for the team at Apress for all their support throughout this process. Special thanks to Robert Brown, who is serving as the book’s technical reviewer. You might know him from his fantastic book, Business Statistics with R. He’s been an invaluable sanity check on everything I’m writing.

The Post-it Note Philosophy

I posted this on LinkedIn a few weeks ago, and it sums up the philosophy behind my writing process.

There’s a Post-it note on my monitor that says: “Don’t melt the reader’s brain.”

This simple reminder drives every page I write in my new book on cyber risk quantification.

A line often attributed to Einstein says it best: “If you can’t explain it simply, you don’t understand it well enough.” In cybersecurity risk, we’ve become so accustomed to complexity that we’ve forgotten this fundamental truth.

My book “From Heatmaps to Histograms” takes the Lego block approach:

→ Start with fundamentals (coin flips, basic probability)

→ Build one concept on the last

→ No brain-melting allowed

I’ve found that if you break complex ideas into small enough pieces, anyone can understand them. I learned this the hard way, struggling through textbooks at my kitchen counter until probability finally clicked.

Writing this book taught me that the biggest breakthrough in cyber risk isn’t more sophisticated math. It’s making quantitative thinking accessible to practitioners who need it most.

I’m constantly looking at that Post-it note, reminding myself who I’m writing for: it’s me 15 years ago, and everyone else who’s been there too.

🧠 How to Quantify What You’ve Never Seen: A Taxonomy of Unknown Risks

A Practical Taxonomy for Risks We Haven’t Lived Yet

Most risk programs live comfortably inside the obvious: phishing, ransomware, and BEC. But what about the risks that haven’t happened to you, or to anyone? This post explores how to think and model at the edge of experience, where data ends.

This idea surfaced while I was writing the risk scenario chapter of my book. That chapter focuses on the obvious: phishing, ransomware, business email compromise, the kinds of risks that, in most organizations, happen most often.

But as I wrote, a question kept lingering in the margins. What about the other kinds of risk, the ones that haven’t happened yet? Do we have a place for them?

We do, but it’s harder to get there. That’s the edge of scenario thinking, where imagination meets quantification and where the data ends, but the analysis doesn’t.

Every risk analyst eventually faces the same uncomfortable question: How do we quantify a risk that’s never happened to us?

It’s one of the most complex problems in our field because it exposes a truth we don’t often say out loud. We’re expected to be rigorous and data-driven, yet much of our work extends beyond firsthand experience.

We model what we’ve seen (incident tickets, logs, audit findings), but we also have to model what we haven’t seen: a supply chain attack that’s hit peers but not us, an AI-driven threat that hasn’t materialized yet, or a failure type no one has imagined.

These aren’t the same analytical problem. Treating them as if they are is where many risk programs go wrong. A risk that’s happened to others but not to you demands a very different approach than one no one has experienced, which again differs from one no one can even conceive of.

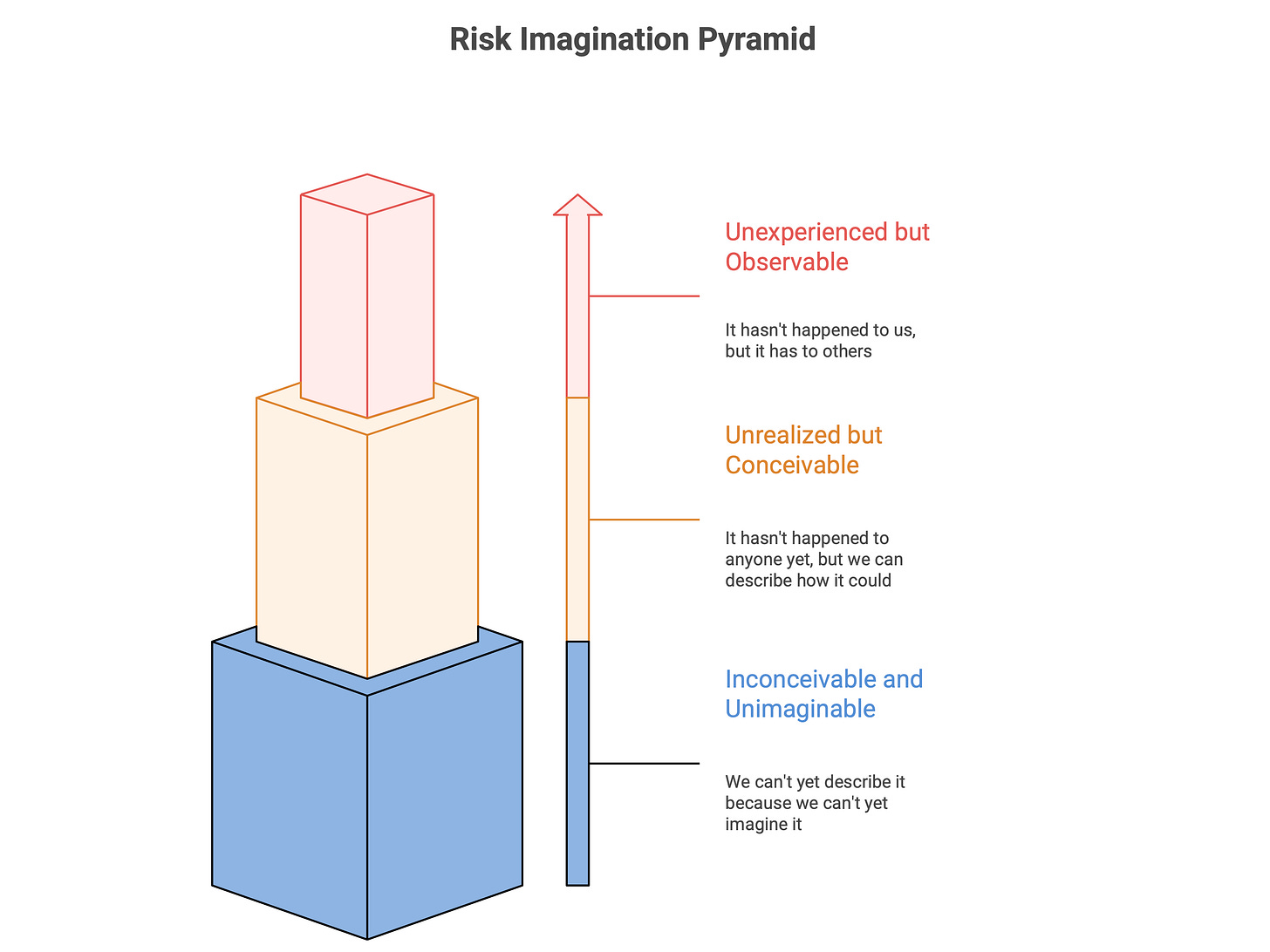

Through experience, I’ve come to see these as three distinct levels of “never happened” risk, each requiring its own mindset and method.

The Three Levels of “Never Happened” Risk

1. Unexperienced but Observable

(It hasn’t happened to us, but it has to others.)

This is the most common and the easiest to quantify. These are the zero-event risks in your organization, scenarios that exist elsewhere in the world but haven’t touched you yet.

Your stance here is empirical. Treat the world as your dataset. Pull base rates from peers, incident databases, or industry research. Adjust for your own context, such as size, control maturity, and exposure, and you can build a defensible range.

When SolarWinds was disclosed in 2020, many companies hadn’t been hit but were clearly exposed. We built models using published incident counts, vendor integration data, and internal telemetry to estimate local probability and impact. The result wasn’t perfect, but it was grounded in evidence.

The mistake is assuming that because it hasn’t happened here, it can’t happen here.

Key mindset: the absence of local evidence doesn’t mean the risk is zero.
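One way to make this mindset concrete is a small Bayesian update: start from a peer base rate, then update it with your own zero-event history. The sketch below is illustrative only. It assumes each organization-year is an independent yes/no trial, and the function name, the peer counts, and the `peer_relevance` weight (how closely you believe peers resemble you) are hypothetical choices, not figures from the book.

```python
def zero_event_estimate(peer_events, peer_org_years, local_clean_years,
                        peer_relevance=0.1):
    """Annual event probability after observing zero local events.

    Builds a Beta prior from peer data, down-weighted by peer_relevance
    (an assumption), then adds the local years with no events.
    """
    # Jeffreys-style starting point (0.5, 0.5) plus discounted peer evidence
    alpha = 0.5 + peer_relevance * peer_events
    beta = 0.5 + peer_relevance * (peer_org_years - peer_events)
    prior_mean = alpha / (alpha + beta)

    # Zero local events only adds to the "no event" count
    posterior_mean = alpha / (alpha + beta + local_clean_years)
    return prior_mean, posterior_mean


# Hypothetical inputs: 12 events across 400 peer organization-years,
# and 10 local years with no event
prior, posterior = zero_event_estimate(12, 400, 10)
print(f"prior {prior:.3f}, posterior {posterior:.3f}")
```

With these made-up inputs, ten clean years pull the estimate from about 4.1% down to about 3.3% per year. It shrinks, but it never reaches zero.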

2. Unrealized but Conceivable

(It hasn’t happened to anyone yet, but we can describe how it could.)

This is the analytical frontier. Plausible, but unobserved. Examples include quantum decryption of current algorithms or AI-generated polymorphic malware that learns as it attacks.

Your stance here is analogical. You reason by pattern and proximity. Look at adjacent technologies or transitions: how quickly did similar systems in the past move from secure to compromised? Use expert elicitation to fill data gaps, but structure it using calibrated ranges rather than gut-check opinions.

In 2013, I modeled the risk of blockchain wallet compromise before any major exchange hacks had occurred. I used online banking fraud frequencies as a proxy, then adjusted for immaturity and control gaps. A year later, the first major breaches confirmed the model's direction.

The trap here is cherry-picking analogies that confirm fear or bias. The antidote is breadth: collect multiple comparables, build a distribution, and reason from the middle.

Key mindset: analogical reasoning isn’t guessing; it’s disciplined extrapolation.
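To show what "collect multiple comparables, build a distribution, and reason from the middle" could look like, here is a minimal sketch. The analog names and annual frequencies are invented for illustration, and summarizing in log space is one reasonable choice among several, not a prescribed method.

```python
import math
import statistics

# Hypothetical annual event frequencies from analogous domains
comparables = {
    "analog_a": 0.02,
    "analog_b": 0.05,
    "analog_c": 0.10,
    "analog_d": 0.04,
    "analog_e": 0.30,  # the scary outlier
}


def summarize_comparables(rates):
    """Return an approximate P5/P50/P95 range across the comparables."""
    # Work in log space so rates that differ by orders of magnitude
    # are treated proportionally
    logs = [math.log(r) for r in rates]
    # n=20 gives 19 cut points: the first is P5, the last is P95
    cuts = statistics.quantiles(logs, n=20, method="inclusive")
    return {
        "p5": math.exp(cuts[0]),
        "p50": math.exp(statistics.median(logs)),
        "p95": math.exp(cuts[-1]),
    }


summary = summarize_comparables(comparables.values())
print({k: round(v, 3) for k, v in summary.items()})
```

The center of the range sits on the median comparable, so a single alarming analogy widens the range without dragging the middle along with it.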

3. Inconceivable and Unimaginable

(We can’t yet describe it because we can’t yet imagine it.)

This is the domain of true surprise, the “how did no one see this coming?” events. Before 9/11, few imagined coordinated hijackings as guided missiles. Before Stuxnet, few imagined air-gapped industrial systems being sabotaged via USB. This is the core subject of Taleb’s book, The Black Swan.

You can’t model the probability of something you can’t conceive, but you can model your resilience to it.

Your stance here is architectural. Focus on designing systems that can absorb shocks and adapt. Build redundancy, strengthen detection, and practice incident response. Model impact categories, such as availability, integrity, confidentiality, productivity, fines, and reputation, rather than specific causes.

When you can’t quantify the odds, quantify your capacity to recover.

Key mindset: when you reach the edge of prediction, move to resilience.
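As a rough illustration of quantifying recovery capacity without naming a cause, the sketch below simulates loss from an availability event of unknown origin. It assumes recovery time follows a lognormal distribution fitted to an expert's P5 and P95 estimates and that loss scales linearly with downtime. The function names, the 2-to-72-hour range, and the cost per hour are all hypothetical.

```python
import math
import random
import statistics

Z95 = statistics.NormalDist().inv_cdf(0.95)  # about 1.645


def lognormal_from_range(p5, p95):
    """Lognormal parameters whose 5th/95th percentiles match the inputs."""
    mu = (math.log(p5) + math.log(p95)) / 2
    sigma = (math.log(p95) - math.log(p5)) / (2 * Z95)
    return mu, sigma


def simulate_outage_loss(hours_p5, hours_p95, cost_per_hour,
                         trials=20_000, seed=7):
    """Monte Carlo loss for an outage, regardless of what caused it."""
    rng = random.Random(seed)
    mu, sigma = lognormal_from_range(hours_p5, hours_p95)
    losses = sorted(rng.lognormvariate(mu, sigma) * cost_per_hour
                    for _ in range(trials))
    return {"p50": losses[trials // 2], "p95": losses[int(trials * 0.95)]}


# Hypothetical: recovery takes 2 to 72 hours (90% interval), $10k per hour
print(simulate_outage_loss(2, 72, 10_000))
```

Rerunning it with a tighter recovery range, for example after a successful failover exercise, shows how much loss an investment in resilience might remove, even when the triggering event stays unknown.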

Universal Lessons

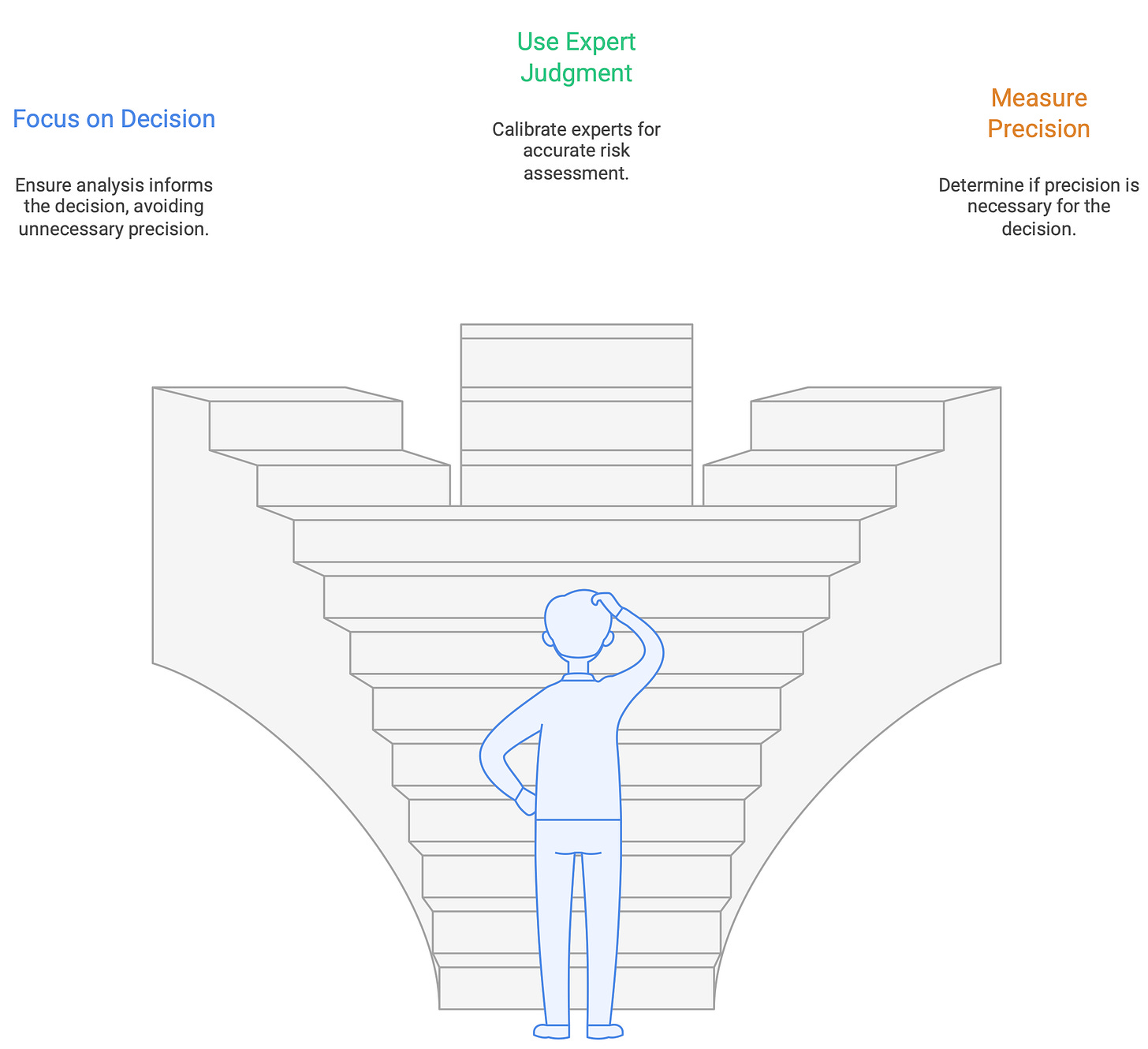

No matter which level of “never happened” risk you’re dealing with, three principles hold true.

Start with the decision. Know what choice the analysis is meant to inform. If the decision wouldn’t change whether the probability is 0.1% or 1%, stop polishing decimals.

Use structured expert judgment. Calibrate experts with seed questions, capture ranges (P5, P50, P95), and reward accuracy, not seniority.

Measure when more precision matters. If perfect information wouldn’t change the decision, it’s not worth chasing.
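The first and third principles can be checked with a quick value-of-information calculation. This is a simplified sketch under loud assumptions: only two actions (accept the risk or buy a control), a control that removes the loss entirely, and a handful of weighted probability scenarios. The function name and all dollar figures are hypothetical.

```python
def value_of_perfect_information(scenarios, loss, control_cost):
    """Expected savings from learning the true probability before deciding.

    scenarios: list of (weight, annual_probability); weights sum to 1.
    Assumes the control fully removes the loss (a simplification).
    """
    # Expected annual cost of each action if we must decide now
    accept_now = sum(w * p * loss for w, p in scenarios)
    mitigate_now = control_cost
    best_now = min(accept_now, mitigate_now)

    # Expected cost if we could pick the best action per scenario
    best_informed = sum(w * min(p * loss, control_cost)
                        for w, p in scenarios)
    return best_now - best_informed


# Is the probability 0.1% or 1%? Equal weight on each.
scenarios = [(0.5, 0.001), (0.5, 0.01)]

# Expensive control: accepting wins either way, so precision is worth nothing
print(value_of_perfect_information(scenarios, 2_000_000, 50_000))

# Cheaper control: the answer flips between scenarios, so precision has value
print(value_of_perfect_information(scenarios, 2_000_000, 10_000))
```

In the first case the result is zero: the decision is the same at 0.1% and at 1%, so further measurement buys nothing. In the second case better information is worth roughly $4,000 a year, which puts a ceiling on how much effort the extra analysis deserves.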

The Deeper Lesson

The most dangerous risks aren’t always the unimaginable ones. They’re the obvious ones we’ve seen elsewhere but convince ourselves don’t apply to us.

What makes great risk analysis isn’t omniscience, it’s clarity. Knowing what kind of “never happened” risk you’re facing tells you which tools will work and which will waste time.

Quantification doesn’t end where experience stops. It just changes form.

📗 Book Excerpt: The Philosophy of “Impossible” Risk Scenarios

“You just said a tsunami can’t destroy a datacenter in Omaha. What about a mega-tsunami?”

If you have ever run a risk brainstorming workshop, you know how quickly the conversation can drift into philosophical debates about what is truly possible.

I’ve been in this meeting many times. We're talking about website outage scenarios, and suddenly, we are discussing asteroid impacts and mega-tsunamis instead of the actual decisions a CISO needs to make next quarter.

This piece is about getting the room back on track. It explains why we bracket remote scenarios and focus our limited time and attention where it matters. I ended up cutting and condensing this section for the chapter, but it stands well on its own. I will share a couple of the other trimmed pieces in the next issue.

This pushback on absolute claims is philosophically valid. When analysts say something is “impossible,” they’re not claiming logical impossibility (like a square circle) or even physical impossibility (like faster-than-light travel).

They’re talking about practical impossibility: scenarios so remote that modeling them would waste resources better spent on actionable risks. This connects to practical reason: cognitive and analytical resources are finite, so analysts must focus on possibilities that could meaningfully inform decisions.

As philosopher William James noted, beliefs should be judged by their practical consequences. A “mega-tsunami reaching Omaha” scenario might be theoretically possible via Earth-altering events (e.g., planetary impact, complete crust displacement) but it fails the pragmatic test: it won’t help a CISO allocate next year’s security budget.

Risk analysis is an exercise in practical wisdom (what Aristotle called phronesis), not exhaustive enumeration of every conceivable threat. Analysts set aside extremely remote possibilities (a practice called bracketing) not because they can prove those scenarios are impossible, but because doing so helps them focus on scenarios that actually matter for business decisions.

📚 What I’m Reading

Curiosity Didn’t Kill the Cat by Erin Eilers

A reminder that great risk identification starts with great questions. AI can surface patterns, but human curiosity reveals the blind spots.

Outside In and Inside Out Superforecasting by Rick Howard

A great reminder that even strong models need human judgment. Forecasting is not about precision for its own sake. It is about making decisions with the uncertainty we have.

✉️ Contact

Have a question about risk analysis, or about anything else? Here’s how to contact me:

Reply to this newsletter if you received it via email

Comment below

Connect on LinkedIn

What specific risk analysis challenges are you facing? Hit reply and let me know.

❤️ How You Can Help

✅ Tell me what topics you want covered: beginner, advanced, tools, AI use, anything

✅ Forward this to a colleague who’s curious about CRQ

✅ Click the ❤️ or comment if you found this useful

If someone forwarded this to you, please subscribe to get future issues.

— Tony
