Issue 7: The Risk Workshop Survival Guide

How to keep risk workshops from drifting into philosophy, edge cases, and noise

In This Issue:

📖 Book Update: The Manuscript Is Done

🔨 The Risk Workshop Survival Guide

📚 What I’m Reading

❓ Reader Question

📖 Book Update: The Manuscript Is Done

I crossed a big finish line earlier this week, and it still feels a little unreal to say it out loud: the manuscript is officially complete. I have lived with this book every day for months, in outlines, in messy notes, in drafts that did not survive the edit, so typing the final line brought a strange mix of relief and momentum. The work is done, and somehow things are moving even faster now.

The manuscript also went through technical review by Robert D. Brown III, one of the sharpest thinkers in decision science. Working with Rob strengthened the book in ways I did not expect. Some of his comments made me laugh and wonder what I was thinking when I first drafted a chapter; others made me rethink how I explain key ideas so they land cleanly for readers. It was the kind of collaboration that leaves the work stronger and leaves you a better thinker than when you started.

From preorders alone, From Heatmaps to Histograms hit #1 in Amazon’s Network Security category.

Next up is copyediting, then printing, then launch. OK, it’s real now.

🎉 Preorders are open for From Heatmaps to Histograms: A Practical Guide to Cyber Risk Quantification.

(Apress / Springer Nature, publishing March 2026)

If you have been following this journey, preorders help more than you might think. They signal demand to the publisher and the retailers.

🔗 The book’s official website

👉 Amazon preorder link

The Risk Workshop Survival Guide

A risk workshop exists for a simple reason: before you can analyze risk, you have to decide which risks are worth analyzing. Its job is to surface candidate scenarios, align on what matters, and provide structure to uncertainty before anyone starts modeling.

When it works, a risk workshop creates shared understanding. People leave with a clearer sense of what could happen, why it matters, and where analysis would inform a decision.

I have hosted more of these sessions than I can count. Over time, I learned that the difficult part is not the modeling that comes later, but the conversation in the room. If no one actively takes responsibility for the shape of that conversation, it will drift toward whatever is most interesting, provocative, or philosophically defensible in the moment.

That is how otherwise well-intentioned workshops end up debating nation-state-sponsored supply chain attacks and mega tsunamis in Nebraska.

These are the moves I have learned, often the hard way, to keep risk workshops focused and productive.

Survival Skill 1

Eliminate the impossible

After a workshop or GenAI brainstorm, you often end up with a very healthy list of risk statements. Sometimes too healthy. I have seen sessions produce fifty statements for a single asset class. No analyst is going to run fifty full assessments, nor should they.

Before you invest hours in scoping, run a quick quality check. Look at each risk statement and ask:

“Could this actually happen in the real world, given how the world works now?”

Some scenarios are impossible in the sense that matters for our work.

A hurricane cannot be the cause of a data breach via SQL injection.

A tsunami cannot destroy a datacenter in Omaha.

Natural disasters do not directly exploit technical vulnerabilities.

This first pass should be quick. You are not assigning probability. You are filtering reality from noise.
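If your workshop output lives in a spreadsheet or a GenAI transcript, part of this first pass can be mechanical. Below is a minimal Python sketch, assuming each risk statement has already been tagged with a threat, a mechanism, and an asset. The `RiskStatement` fields, the hazard and exploit sets, and the `UNREACHABLE` table are all hypothetical: they encode your own judgment about practical impossibility, not any standard taxonomy. Like the manual pass, it only sorts statements into keep and discard; it assigns no probabilities.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskStatement:
    # Hypothetical tagging scheme: who or what acts, how, and against what.
    threat: str
    mechanism: str
    asset: str


# Hypothetical rule 1: natural hazards do not directly exploit
# technical vulnerabilities.
NATURAL_HAZARDS = {"hurricane", "tsunami", "earthquake"}
TECHNICAL_EXPLOITS = {"sql injection", "phishing", "credential stuffing"}

# Hypothetical rule 2: hazard/asset pairs that cannot physically meet,
# such as a tsunami and an inland datacenter.
UNREACHABLE = {("tsunami", "omaha datacenter")}


def is_practically_possible(s: RiskStatement) -> bool:
    """Return False only for statements that break one of the rules above."""
    threat = s.threat.lower()
    if threat in NATURAL_HAZARDS and s.mechanism.lower() in TECHNICAL_EXPLOITS:
        return False
    if (threat, s.asset.lower()) in UNREACHABLE:
        return False
    return True


def first_pass(statements):
    """Split statements into (keep, discard). No probabilities assigned."""
    keep = [s for s in statements if is_practically_possible(s)]
    discard = [s for s in statements if not is_practically_possible(s)]
    return keep, discard


statements = [
    RiskStatement("Hurricane", "SQL injection", "Customer database"),
    RiskStatement("Tsunami", "Flooding", "Omaha datacenter"),
    RiskStatement("Cybercriminals", "SQL injection", "Customer database"),
]
keep, discard = first_pass(statements)
print(len(keep), len(discard))  # 1 2
```

Rules like these only catch the obvious cases. Anything they let through still gets a human look, which keeps the pass quick without pretending it is automatic.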

Survival Skill 2

Handle “impossible” pushback with philosophy

There is always someone who will ask, “But what if you are wrong?”

Fair question. When analysts say “impossible,” we do not mean logically impossible, like a square circle, or physically impossible, like faster-than-light travel. We mean practically impossible: so far outside the range of the world we live in that modeling it would not help anyone make a decision.

Practical impossibility is about stewardship of attention. We focus on possibilities that can inform decisions within the constraints of the next quarter, next year, or the current budget cycle. As William James put it, beliefs are judged by their practical consequences. A mega tsunami reaching Omaha does not help a CISO allocate next year’s security budget.

Risk analysis is an exercise in practical wisdom. We bracket extreme theoretical scenarios not because we can prove they will never happen, but because including them makes our analysis less useful.

Survival Skill 3

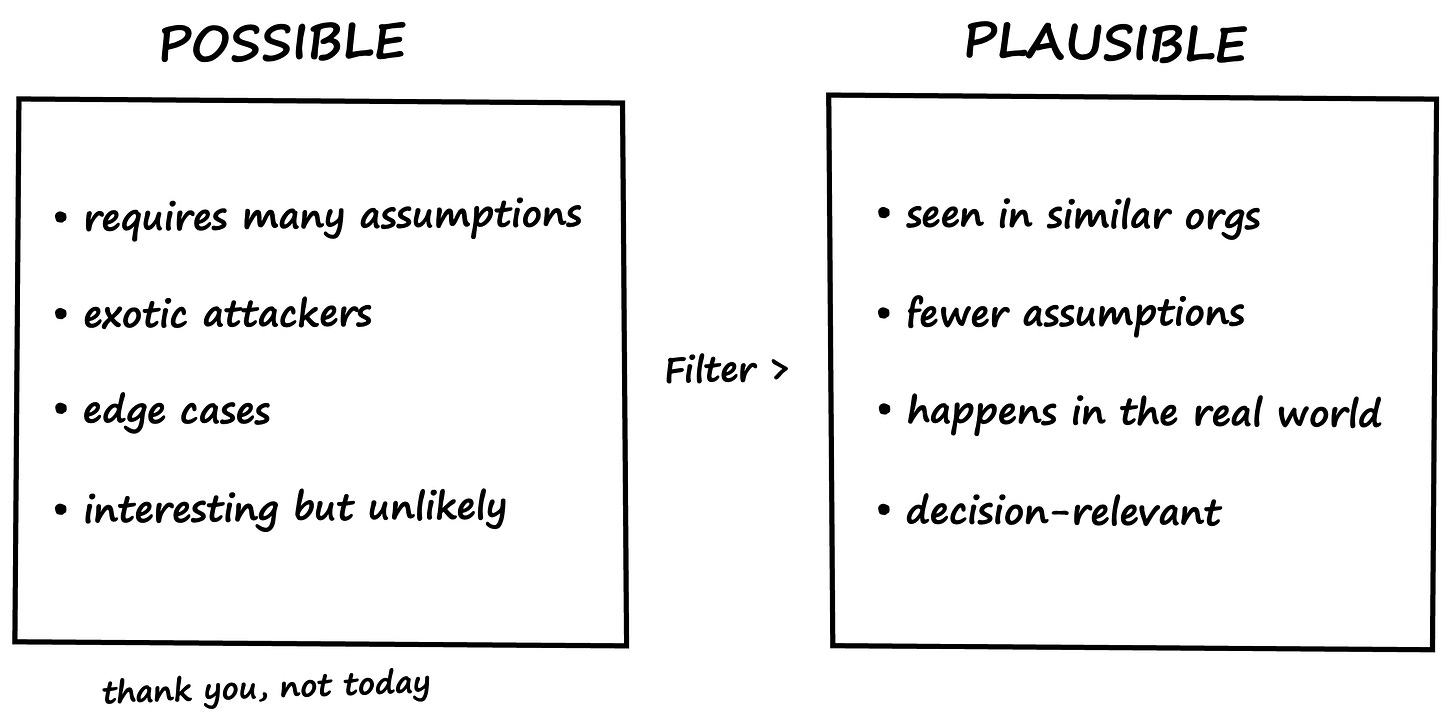

Prioritize plausible before possible

Now you are left with things that could happen. The trick is choosing which ones deserve your time.

Consider two data breach scenarios:

A: Cybercriminals exploit an unpatched web application vulnerability.

B: A nation-state deploys a custom zero-day against proprietary middleware.

Both are possible. The question is which is more plausible for your organization.

Unless you are a defense contractor, Scenario A is usually the better use of time. It requires fewer special conditions and matches patterns we see in actual incident reports. Scenario B is not wrong. It is simply a poorer investment if you are trying to understand your most likely risks.

Survival Skill 4

Use Ockham’s Razor before you scope

When choosing between scenarios that could happen, ask:

Which happens more often in organizations like ours?

Which requires fewer unusual circumstances?

Which would a reasonable security professional worry about first?

This is Ockham’s Razor applied to risk analysis. Favor the explanation that makes the fewest assumptions. If a scenario requires multiple unlikely events to align just right or assumes sophisticated attackers for a very typical target, it probably belongs lower on your list. You are not trying to impress anyone with complexity. You are trying to be useful.

(Note: I wrote more about Ockham’s Razor on my blog.)
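The three questions can be turned into a rough sort key. This Python sketch assumes you have written down, for each scenario, the unusual conditions it needs and a rough frequency rating drawn from your own incident history or a source like the Verizon DBIR. The field names and the 1 to 5 scale are hypothetical, and the ordering rule (fewest special conditions first, then most frequent) is one reasonable reading of Ockham’s Razor, not a formal method.

```python
from dataclasses import dataclass, field


@dataclass
class Scenario:
    name: str
    # Unusual conditions that must all hold for the scenario to occur.
    special_conditions: list[str] = field(default_factory=list)
    # Hypothetical rating: 1 (rare) to 5 (common) in organizations like ours.
    frequency_rating: int = 1


def ockham_order(scenarios):
    """Fewest special conditions first; ties go to the more frequent scenario."""
    return sorted(
        scenarios,
        key=lambda s: (len(s.special_conditions), -s.frequency_rating),
    )


scenarios = [
    Scenario(
        "B: Nation-state zero-day against proprietary middleware",
        special_conditions=[
            "nation-state interest in our organization",
            "custom zero-day developed",
            "middleware reachable by the attacker",
        ],
        frequency_rating=1,
    ),
    Scenario(
        "A: Cybercriminals exploit an unpatched web application vulnerability",
        special_conditions=["patch not applied"],
        frequency_rating=4,
    ),
]

for s in ockham_order(scenarios):
    print(len(s.special_conditions), s.frequency_rating, s.name)
```

Counting conditions is crude, since not every assumption is equally unlikely, but writing them down is often what makes the room see how much Scenario B has to get right.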

Survival Skill 5

Correct for “cool threat” bias

Security teams are uniquely talented at jumping straight to the sophisticated edge case. It is part of the job and part of the fun. But many of these scenarios are the cybersecurity equivalent of an espionage thriller. They look great on the whiteboard, but they are rarely what hurts us.

If the room is stuck, pull up your own incident logs or skim the Verizon DBIR. In most organizations, the most likely threat is not an APT. It is Sam from Accounting, who clicks on anything that looks like an invoice.

Availability bias pulls us toward the dramatic. Workshop discipline pulls us back to reality.

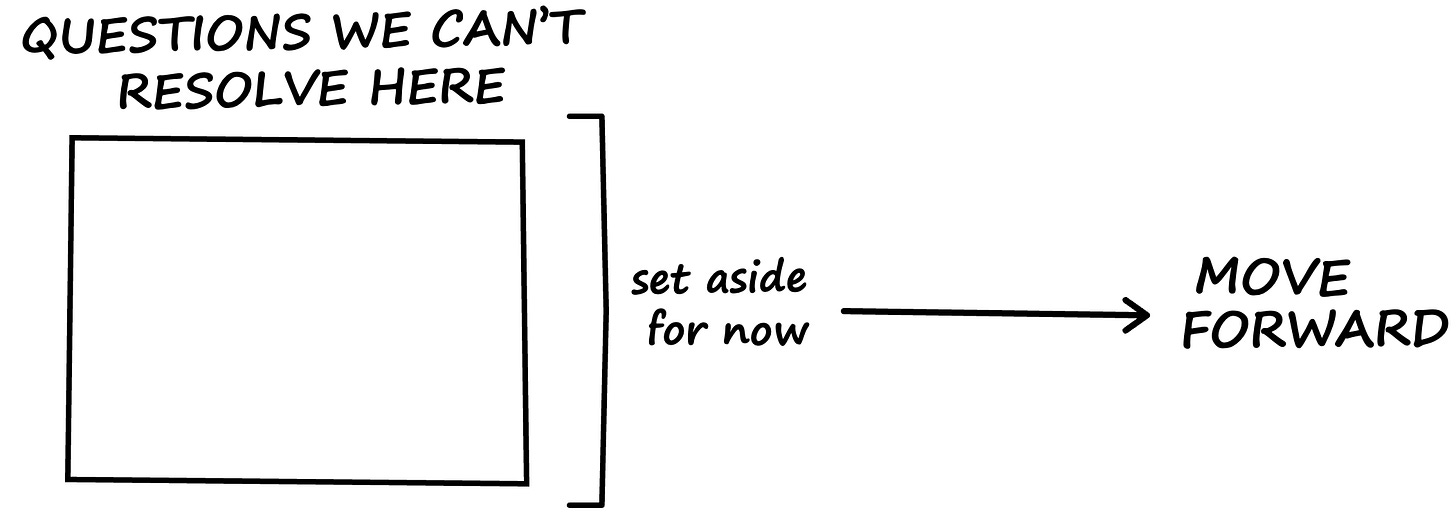

Survival Skill 6

Bracket and move on

Every so often a workshop takes a philosophical turn. Someone will say:

“Nothing is truly knowable.”

“Why model a breach at all? The world might end tomorrow.”

“What if the real threat is something we have not imagined yet?”

Technically, none of these is wrong. They are simply not helpful.

This is where bracketing is your friend. It suspends judgment on the unresolvable questions so you can focus on what is actionable today.

My go-to line:

“You are right that anything could happen. For the purpose of this exercise, let us bracket that. Given what we know today, what is the most plausible and decision-useful scenario we can build?”

It validates the concern and keeps the session moving.

What You Do Next

The next time you run a workshop, use this mental checklist to keep things on track.

Eliminate the impossible. Don’t debate philosophy when judgment will do.

Prioritize the plausible. Possible is cheap. Attention is not.

Favor simpler explanations. Start with what requires fewer assumptions.

Watch for “cool threat” bias. Interesting is not the same as likely.

Bracket what we can’t resolve here. Set it aside and keep moving.

Aim attention at the risks that matter. The rest can wait.

When someone takes responsibility for the room, the workshop usually does what it’s supposed to do.

📚 What I’m Reading

Here are a few pieces I’ve really enjoyed over the last few weeks:

How to Model Enterprise Operational Risk | Graeme Keith

I treat Graeme’s pieces the same way my wife treats her Sunday New York Times crossword. When one appears in my feed, I save it for the right window so I can really think and absorb it. Thoughtful and worth your full attention.

Six Common Challenges in Cyber Risk Quantification | Prometheus Yang

A useful summary of the organizational friction points that quietly derail CRQ programs. Always helpful to see how others frame the same challenges we face.

Against the Gods | Peter Bernstein (re-read)

I revisited parts of this classic while finishing the early chapters of the book. Bernstein’s storytelling about probability, uncertainty, and the invention of risk still holds up. A rewarding slow burn.

❓ Reader Question (via LinkedIn)

“I’m comfortable doing one-off quantitative analyses, but leadership keeps asking why we don’t have a single ‘cyber risk number’ for the whole organization. Is that a reasonable expectation, or a misunderstanding of what CRQ can actually do?”

My Answer

This question comes up a lot, especially once leadership starts to see value in quantitative analysis.

The short answer is that a single enterprise-wide cyber risk number is usually the wrong abstraction, even if the question behind it is reasonable.

What leaders are really asking for is a sense of scale. Are we talking about hundreds of thousands, tens of millions, or something that could threaten the business? That’s a fair question, and CRQ can absolutely help answer it.

Where things go wrong is when that curiosity gets collapsed into a single number.

In practice, cyber risk does not behave like one thing. It behaves like a portfolio of distinct loss scenarios: ransomware, data breaches, operational outages, fraud, regulatory action. Each has different drivers, different controls, different time horizons, and different decision levers. Rolling all of that into a single figure hides trade-offs, masks opportunity costs, and makes capital conversations harder, not easier.

What I’ve found works far better is a small, enterprise-wide portfolio of material loss scenarios, usually six to ten scenarios, expressed in financial terms and tied directly to real decisions. That structure makes it possible to have meaningful capital conversations: how much loss the organization could absorb, how much is transferred through insurance, and where coverage stops short.

That nuance matters, because not all cyber losses are insured, and not all policies respond the same way. Ransom payments, business interruption, regulatory fines, legal costs, and recovery expenses behave very differently under coverage. Collapsing all of that into a single number hides exactly the trade-offs leaders need to see.
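To make the portfolio view concrete, here is a minimal Monte Carlo sketch in Python. Every number in it is made up for illustration: the scenario names come from the list above, but the annual frequencies, the lognormal loss parameters, the retention and limit, and which scenarios the policy is assumed to cover are hypothetical, and applying one shared set of policy terms to every covered scenario is a simplification of how real cyber policies respond. What matters is the shape of the output: one row per scenario, split into retained and insured loss, next to the single mean annual total that hides those differences.

```python
import math
import random

random.seed(7)  # fixed seed so runs are repeatable

# Hypothetical parameters per scenario:
# (annual event rate, median loss per event, lognormal sigma, policy responds?)
SCENARIOS = {
    "Ransomware": (0.30, 2_000_000, 1.0, True),
    "Data breach": (0.20, 1_500_000, 1.2, True),
    "Operational outage": (0.50, 400_000, 0.8, True),
    "Fraud": (0.40, 250_000, 1.0, False),
    "Regulatory action": (0.05, 3_000_000, 0.9, False),
}
RETENTION = 500_000  # hypothetical per-event deductible
LIMIT = 5_000_000    # hypothetical per-event coverage limit
TRIALS = 20_000      # simulated years


def poisson(lam):
    """Draw an event count with Knuth's method; fine for small rates."""
    threshold, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= random.random()
        if p <= threshold:
            return k
        k += 1


def split_loss(loss, covered):
    """Split one event's loss into (retained, transferred to insurance)."""
    if not covered:
        return loss, 0.0
    transferred = min(max(loss - RETENTION, 0.0), LIMIT)
    return loss - transferred, transferred


def simulate():
    """Return mean annual (retained, insured) loss for each scenario."""
    totals = {name: [0.0, 0.0] for name in SCENARIOS}
    for _ in range(TRIALS):
        for name, (lam, median, sigma, covered) in SCENARIOS.items():
            for _ in range(poisson(lam)):
                loss = random.lognormvariate(math.log(median), sigma)
                retained, transferred = split_loss(loss, covered)
                totals[name][0] += retained
                totals[name][1] += transferred
    return {n: (r / TRIALS, t / TRIALS) for n, (r, t) in totals.items()}


results = simulate()
for name, (retained, insured) in results.items():
    print(f"{name:<20} retained ${retained:>12,.0f}  insured ${insured:>12,.0f}")

# The "single number": mean annual loss summed across every scenario.
single_number = sum(r + t for r, t in results.values())
print(f"{'Single number':<20} ${single_number:,.0f}")
```

The last line is the figure leadership asks for. The rows above it are where the decisions live: which losses the organization carries itself, which ones insurance absorbs, and where coverage stops short.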

If someone insists on a single number, I’m careful about how it’s framed. At best, it’s a rough summary of exposure, not a scorecard, target, or KPI. The moment it becomes something you try to optimize directly, it starts obscuring more than it reveals.

In my experience, once leaders see the portfolio view, they stop asking for a single number. Not because it’s impossible to calculate, but because they can finally see what’s driving risk, where money changes outcomes, and what trade-offs they’re being asked to make.

That’s a much more useful conversation.

✉️ Contact

Have a question about this, or anything else? Here’s how to reach me:

Reply to this newsletter if reading via email

Comment below

Connect with me on LinkedIn

Elsewhere

I share shorter thoughts on risk, metrics, and decision-making on LinkedIn.

Book updates, chapter summaries, tools, and downloads are at www.heatmapstohistograms.com

My longer-form essays and older writing live at www.tonym-v.com

❤️ How You Can Help

✅ Tell me what topics you want covered: beginner, advanced, tools, AI use, anything

✅ Forward this to a colleague who’s curious about CRQ

✅ Click the ❤️ or comment if you found this useful

If someone forwarded this to you, please subscribe to get future issues.

Thanks for reading.

—Tony