Issue 8: Why "Risk-Based Security" Remains Elusive

Lessons from a week on Reddit: why the field still confuses compliance with risk

In This Issue:

📖 Book Update: Production and Pre-Orders

🎤 Upcoming Webinars

📝 Why "Risk-Based Security" Remains Elusive

❓ Reader Question

📖 Book Update: Production and Pre-Orders

From Heatmaps to Histograms has officially entered production with Apress. The Amazon pre-order date has moved up to Friday, April 3, but we’re still targeting an early March release. I’m 90% confident it’ll land between March 4–13, just in time for RSA. Ebook and international ordering options are coming soon.

It even briefly hit #1 in Amazon’s Network Security category, which was a fun surprise.

🔗 Pre-order on Amazon 🔗 Book website

🎤 Upcoming Webinars

January 21, 2026 at 12:00 PM EST “The Six Levers That Actually Move Risk (Hint: It’s Not Just Controls)” Greater Ohio Chapter, FAIR Institute

This was one of the top-rated talks at FAIRcon in November. If you missed it, here’s your chance to see it. I’ll share lessons learned from building Netflix’s FAIR-based CRQ program, starting from the premise that controls are just one lever, and often not the biggest one. Most changes in risk come from forces far outside your walls. This presentation identifies six environmental forces acting on cyber risk and how each affects loss event frequency and loss magnitude.

This virtual webinar is open to anyone. You don’t need to be a member of the Ohio chapter or live in Ohio.

January 29, 2026 at 12:00 PM EST “From Gut Feel to Good Data: How AI Can (and Can’t) Transform Risk Management” FAIR Institute

Artificial intelligence promises faster, richer insights for cyber risk quantification, but it also brings hallucinations, biases, and overconfidence. This talk explores where AI truly adds value in transforming gut-feel estimates into usable data, and where human judgment and validation remain essential. Attendees will leave with practical strategies to integrate AI into their risk workflows without losing rigor or credibility.

📝 Why “Risk-Based Security” Remains Elusive

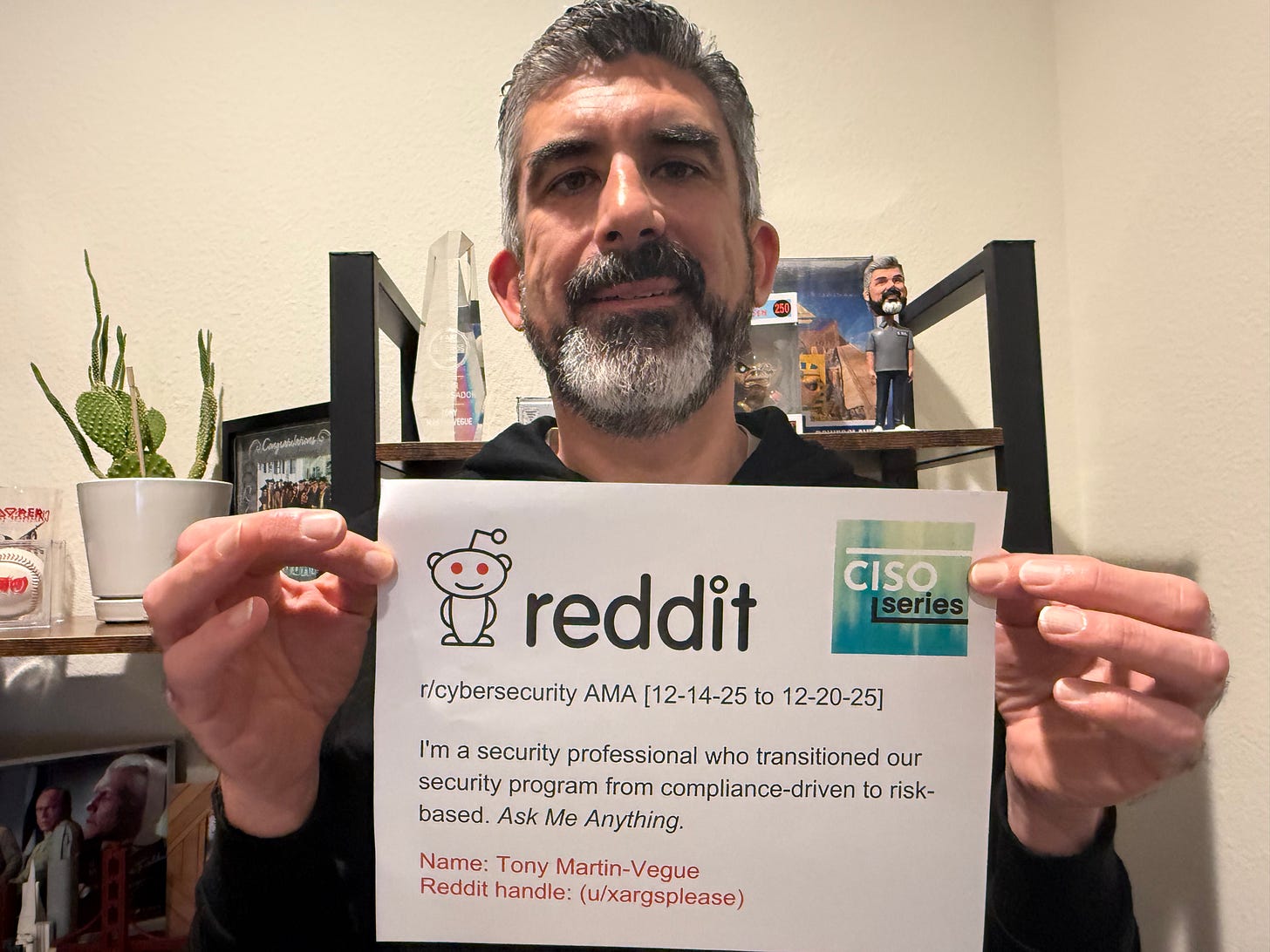

Over the holidays, I did a Reddit AMA on r/cybersecurity with three CISOs. The topic was: “I’m a security professional who transitioned our security program from compliance-driven to risk-based. Ask Me Anything.”

I expected the usual questions about tools, metrics, and getting executive buy-in, and perhaps some pushback on quantitative methods in general. I expected to dig deep into techniques and maybe a bit of mythbusting. It is Reddit, after all, a notoriously tough crowd. What I got instead was a bit more revealing and personally enlightening.

The AMA brought to the forefront something I wrote about extensively in the book but sometimes lose sight of day to day, because I work almost exclusively with clients who are already doing some level of quantification. The gap between “compliance-based security programs” and “risk-based thinking” is wider than even the quantification community acknowledges. We’ve been focused on teaching people how to build models, even though many still don’t understand why models are necessary. That’s a pedagogy problem, not a technical one.

What made the week interesting wasn’t that everyone was confused. The thread was divided between people who immediately understood what we were talking about and people who believed they were already doing a risk-based program, but weren’t. Understanding what separated those two groups taught me more about the state of the field than any conference presentation I’ve given.

The Scoring System That Wasn’t Risk Management

Early in the thread, someone asked for practical examples. I walked through a ransomware scenario with frequency estimates, loss components in dollars, and the options leadership was choosing between. Basic and standard CRQ work.
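For readers who have not seen one, a walk-through like that can be sketched in a few lines of Python. This is a minimal illustration, not the model used in the AMA: every number is invented, the function names are hypothetical, event counts are assumed to follow a Poisson distribution, and the loss per event is assumed to be lognormal, fit to a 90% confidence interval.

```python
import math
import random
import statistics


def sample_poisson(rate, rng):
    """Draw one Poisson event count (Knuth's method, fine for small rates)."""
    threshold = math.exp(-rate)
    count, product = 0, rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def sample_loss(low, high, rng):
    """Draw one loss from a lognormal whose 90% interval is (low, high)."""
    mu = (math.log(low) + math.log(high)) / 2
    sigma = (math.log(high) - math.log(low)) / (2 * 1.645)
    return rng.lognormvariate(mu, sigma)


def simulate_annual_losses(events_per_year, loss_low, loss_high,
                           trials=20_000, seed=7):
    """Simulate total ransomware loss for many hypothetical years."""
    rng = random.Random(seed)
    losses = []
    for _ in range(trials):
        events = sample_poisson(events_per_year, rng)
        losses.append(sum(sample_loss(loss_low, loss_high, rng)
                          for _ in range(events)))
    return losses


# Hypothetical inputs: one event every five years on average,
# each costing between $250k and $4M (90% confidence interval).
losses = simulate_annual_losses(events_per_year=0.2,
                                loss_low=250_000, loss_high=4_000_000)

mean_loss = statistics.fmean(losses)
p_any_loss = sum(1 for x in losses if x > 0) / len(losses)
p95 = statistics.quantiles(losses, n=20)[-1]  # 95th percentile

print(f"Chance of at least one event in a year: {p_any_loss:.1%}")
print(f"Average annual loss: ${mean_loss:,.0f}")
print(f"95th percentile annual loss: ${p95:,.0f}")
```

The output is a distribution of annual loss in dollars rather than a score, which is what makes it possible to compare the options leadership is choosing between.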

Another commenter jumped in with their own example: an elaborate scoring system where applications were assessed against control frameworks, weighted by criticality, filtered through review processes, then bucketed into high, medium, and low categories. The description was detailed and clearly took real effort. They walked through how apps scored on a 1-100 scale using weighted formulas for vulnerabilities, control implementation, test cadence, and audit findings. The scores were precise, applications ranked cleanly, and leadership got quarterly reports showing movement.

They believed they were describing risk management. I had to explain why they weren’t:

“This is a well thought out scoring and governance system, but it’s still measuring control posture, not risk. All the ‘math’ happens in ordinal space, so at the end you know which app is ‘higher’ than another, not how much loss you’re exposed to or what you bought down by fixing it. High/Medium/Low doesn’t tell leadership whether Control A was a better investment than Control B, only that something moved. That’s fine for standardization and audits. It hits a ceiling the moment the question becomes tradeoffs, ROI, or ‘was this worth the money.’”

Risk, at its core, has two components: probability (how often something happens; likelihood, frequency, chances) and impact (how bad it is when it does; magnitude, loss, consequence). If your assessment can’t tell you both of those things in terms that support decisions, you’re measuring something else. This scoring system measured control coverage. It’s useful for passing audits, but it couldn’t answer the question every executive cares about: If we spend money here, how much safer are we?
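To make the contrast with ordinal scoring concrete, here is a small sketch with made-up numbers. The control names, costs, and effects are hypothetical, and it uses expected annual loss (frequency times mean loss per event) as a deliberately crude summary of risk. A real analysis would carry the full distribution.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    annual_cost: float          # what the option costs per year, in dollars
    events_per_year: float      # probability side: how often the loss occurs
    mean_loss_per_event: float  # impact side: how bad it is when it does

    def expected_annual_loss(self):
        return self.events_per_year * self.mean_loss_per_event


def net_benefit(option, baseline):
    """Expected loss avoided per year, minus what the option costs."""
    avoided = baseline.expected_annual_loss() - option.expected_annual_loss()
    return avoided - option.annual_cost


# All figures below are invented for illustration.
baseline = Option("Do nothing", 0, 0.20, 1_400_000)
control_a = Option("Control A (cuts frequency)", 60_000, 0.08, 1_400_000)
control_b = Option("Control B (cuts magnitude)", 150_000, 0.20, 700_000)

for option in (control_a, control_b):
    print(f"{option.name}: "
          f"expected annual loss ${option.expected_annual_loss():,.0f}, "
          f"net benefit ${net_benefit(option, baseline):,.0f}")
```

Under these assumptions, both controls could plausibly move an application from High to Medium on a scoring system, yet only one of them avoids more loss than it costs. That difference is invisible without both frequency and magnitude expressed in dollars.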

This pattern repeated throughout the week. People used “risk-based” to describe prioritization frameworks, vulnerability triage workflows, compliance programs that measured control posture, and GRC tools that tracked remediation. It’s all useful work, some even necessary, but none of it was risk management.

The Questions That Never Came

Midway through, I realized the conversations were drifting and we weren’t talking about the same thing, so I stopped and wrote a definitional post describing what “risk-based security” is in practice:

“At its core, risk management is about decision-making under uncertainty. Risk itself is a future event. A risk assessment is a forecast about something adverse that might happen, how often it could happen, and how bad it would be if it does. It’s not a list of issues, gaps, concerns, audit findings, controls, or aspirations. Those are inputs, not risk. When a risk register starts to look like a to-do list, what you really have is a compliance tracking system with risk language layered on top.”

The questions I wanted never really materialized:

How do you validate your loss estimates?

When does quantification provide enough decision value to justify the effort?

How do you handle scenarios where you have no data?

What’s the right level of precision for different types of decisions?

These are hard questions with nuanced answers. They require deep thinking about uncertainty, imperfect information, reasoning through problems, and the limits of modeling. These are the conversations that move the field forward. However, you can’t ask them if you think “risk-based” means moving your compliance tracking from red-yellow-green to a 1-5 scale with decimals.

The Skeptical Argument Worth Engaging With

Near the end of the week, someone posted something completely different. User Competitive-Coma shared a conversation they’d had about CRQ that had stumped them:

“Risk quantification is a poor forecasting tool without deep, high quality historical data -- the kind actuaries rely on. This is why the forecasts are never right.

If it were an effective forecasting method, stock traders would already use it successfully. Historical results show that those applying quantitative risk models to trading fail to outperform the market over time (e.g., S&P 500).

Its primary contribution appears to be limited to structured discussion of risks, which is a benefit that does not require formal risk quantification to achieve. If anything, the $$$ distracts from the points at hand.”

They added: “The problem I have is that this jives with my experience with FAIR and HDR, so I don’t have a rebuttal. That said, I am seeking to understand so please correct my thinking if I got any of that wrong.”

This was the best question in the entire thread. It was a genuine challenge, and both the original skeptic and the person asking understood what CRQ claims to be. They weren’t confusing prioritization with risk management. They understood that CRQ attempts to characterize uncertainty to support decisions, and they’d encountered someone skeptical that it delivers enough value to justify the complexity. They wanted help engaging with that argument.

That’s the conversation the field should be having. I tried to address each point directly. Here’s my response to the stock trading challenge:

“The comparison to stock trading is a category error. Markets are adversarial and reflexive systems. The moment you act on a model (buy stock), you change the system itself. A ransomware attacker does not change their behavior because you ran a Monte Carlo simulation, but markets absolutely respond to trading strategies. They also answer very different questions. In trading, the question is ‘can this beat the S&P 500.’ In CRQ, the question is ‘can this model help us make better tradeoffs under uncertainty?’ Those are fundamentally different problems.”

And then I had to acknowledge the elephant in the room:

“Finally, there is snake oil everywhere. Cybersecurity has plenty of it, and cyber risk quantification is not immune. If a person, vendor, or product is selling FAIR or HDR as accurate predictions or precise forecasts, they are overselling it at best or lying at worst. That is not what CRQ is for. CRQ is a decision support tool. It helps compare options, test sensitivity, understand uncertainty, and identify where uncertainty matters, where and why we care. When it is used that way, it is doing exactly what it is supposed to do.”
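As a rough sketch of what testing sensitivity can look like in practice: hold everything fixed except the input you trust least, and see whether the decision changes. All figures and function names below are hypothetical, and the model is reduced to expected values to keep it short.

```python
def net_benefit(events_per_year, mean_loss, frequency_cut, annual_cost):
    """Expected annual loss avoided by a control, minus the control's cost."""
    avoided_loss = events_per_year * frequency_cut * mean_loss
    return avoided_loss - annual_cost


def breakeven_frequency(mean_loss, frequency_cut, annual_cost):
    """Event frequency at which the control exactly pays for itself."""
    return annual_cost / (frequency_cut * mean_loss)


# Invented inputs: a control that costs $60k per year and is assumed
# to prevent 60% of events that would each cost $1.4M on average.
MEAN_LOSS = 1_400_000
FREQUENCY_CUT = 0.60
ANNUAL_COST = 60_000

breakeven = breakeven_frequency(MEAN_LOSS, FREQUENCY_CUT, ANNUAL_COST)
print(f"Control pays for itself above {breakeven:.3f} events per year")

# Sweep the uncertain input (event frequency) across a plausible range.
for events_per_year in (0.02, 0.05, 0.10, 0.20, 0.40):
    value = net_benefit(events_per_year, MEAN_LOSS, FREQUENCY_CUT, ANNUAL_COST)
    verdict = "worth it" if value > 0 else "not worth it"
    print(f"{events_per_year:>5.2f} events/yr: "
          f"net benefit ${value:>10,.0f} ({verdict})")
```

If the whole plausible range for frequency sits on one side of the breakeven point, the estimate does not need to be more precise to support the decision. If the range straddles it, that is where the uncertainty matters and where better data is worth the effort.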

What separated this exchange from the rest of the thread was conceptual depth. This person had encountered a real critique of risk quantification and wanted to understand how to respond. The scoring system folks from earlier in the week didn’t know they were missing anything.

The Pedagogical Problem

The practitioners who posted thoughtful scoring systems aren’t the problem. They’re doing good work within real constraints. The problem is we, as a field, told them that better compliance tracking is risk management without changing how they think about the underlying problem.

What separated the people who got it from those who didn’t wasn’t intelligence or experience. It was exposure to decision science, actuarial thinking, forecasting, and statistics, disciplines that most cybersecurity training doesn’t cover. The CISSP still teaches asset-based quantitative risk methods from the 1980s that make CRQ seem like either an impossible level of precision or mathematical theater. No wonder people are confused.

This is why I wrote From Heatmaps to Histograms. I’m not trying to convince sophisticated skeptics that quantification is perfect, because it isn’t, but I can help practitioners see that the “risk programs” they’re running are often compliance programs with better labels, and show them what the actual shift looks like. The conversation we need isn’t “is quantification worth it?” It’s “do we understand what risk management actually is?” Until we get that foundation right, we’re still passing audits, not managing risk.

The full Reddit AMA is still up on r/cybersecurity. It’s worth reading to see where the field actually is versus where we think it is.

❓ Reader Question

“I’m a college student studying cybersecurity and I’m interested in risk management, but I’m afraid AI will make it obsolete. Should I still pursue this path?”

I think risk quantification is one of the better bets for job security right now, for a specific reason.

Compliance-based risk work is already being automated, and AI is surprisingly good at it. Scoring vulnerabilities, tracking controls, filling out risk registers, and mapping to frameworks are all pattern-matching exercises. If your job is maintaining heat maps or running compliance reports, that work is disappearing fast.

Decisions under uncertainty are different. Judgments about tradeoffs, opportunity costs, and what risks are worth taking are harder to automate because they require business context that changes constantly. CRQ means translating technical risk into financial terms and helping executives choose between competing uses of capital. AI helps with parts of that workflow, but it can’t replace the judgment required to do it well.

If I were starting out today, I’d learn FAIR. It teaches you to think about risk in terms of decisions rather than scores. FAIR is widely recognized, has a thriving and growing community through the FAIR Institute, is taught in colleges and universities, and is well covered in books and documentation. It’s easy to find help and mentors, and it fits well with existing risk frameworks: there’s solid guidance on how to integrate it with ISO, NIST, and others. FAIR isn’t the only model, of course, but it’s the one I’d immerse myself in. Understanding probability, impact, and how to communicate uncertainty to non-technical leaders is what keeps you relevant as the field changes.

I think in the next few years, practitioners doing rote compliance work will struggle. If you can translate risk into business language and help organizations make better decisions, you'll have work.

✉️ Contact

Have a question about this, or anything else? Here’s how to reach me:

Reply to this newsletter if reading via email

Comment below

Connect with me on LinkedIn

Elsewhere

I share shorter thoughts on risk, metrics, and decision-making on LinkedIn.

Book updates, chapter summaries, tools, and downloads are at www.heatmapstohistograms.com

My longer-form essays and older writing live at www.tonym-v.com

❤️ How You Can Help

✅ Tell me what topics you want covered: beginner, advanced, tools, AI use, anything

✅ Forward this to a colleague who’s curious about CRQ

✅ Click the ❤️ or comment if you found this useful

If someone forwarded this to you, please subscribe to get future issues.

Thanks for reading.

—Tony

Great article, I am definitely going to read through the Reddit AMA. I preordered the book so hopefully it will arrive soon after I finish reading Jack Jones second edition of measuring and managing information risk.

Tony, great article. I share the same challenge when explaining cyber risk: it requires both probability (likelihood) and magnitude (loss). You can make a rough judgment with only one, but you cannot determine actual risk without both. It is like blood pressure; you might infer something from a single number, but a true classification requires both systolic and diastolic values because they represent different phases of the system's function. Risk works the same way. I am curious to hear your thoughts on this analogy.